The Three Jobs That Will Survive and Thrive in an AI-Dominated World

How thinking of AI as a tool (not a mind) shows us where humans will add the most value

Over the next five years, the global workforce is set for profound change. A 2023 Goldman Sachs report estimates that 300 million jobs worldwide could be impacted by generative AI, with up to 25% of work tasks in advanced economies exposed to automation.[1] Similarly, the World Economic Forum’s 2023 Future of Jobs Report predicts that by 2027, 83 million jobs may disappear due to technology — even as 69 million new roles emerge.[2]

Such rapid change is understandably scary, especially for people worried about losing their jobs. On a deeper level, it can feel like an existential threat: what if the skills you have spent a lifetime developing suddenly became obsolete? These concerns are entirely valid, but the future is far from hopeless. It is a challenge that can be met with the right kind of preparation. And that preparation isn’t just about learning how to use AI; it’s about learning how to think about AI in a fundamentally different way.

-------

When most people talk about artificial intelligence (AI), they focus on the “intelligence” part, as if AI were a person, or at least a machine capable of thinking. But I don’t think that’s the most useful frame. Yes, when AI is given a task, it may deploy patterns of reasoning similar to a human’s, but that doesn’t make it sentient or conscious. That doesn’t make it human. A more helpful way to think about generative AI is to treat it like something far more mechanical: a transfer function.

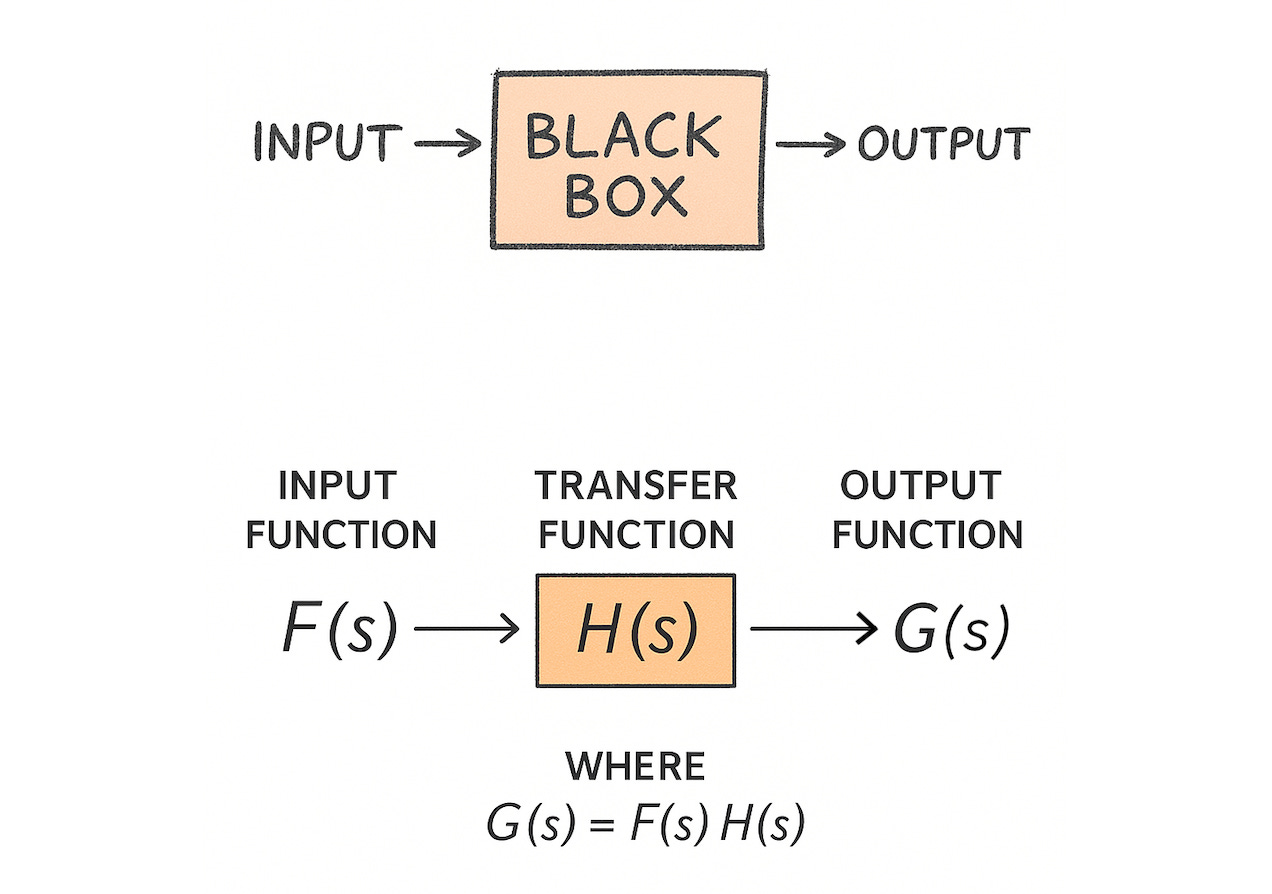

In engineering, a transfer function is a mathematical model that describes how a system transforms an input signal into an output signal. You feed it something, it operates on that input (often in a way that’s opaque or hard to inspect), and it gives you a result. It’s a “black box” that takes an input and somewhat predictably turns it into an output.[3]

FM radio offers a helpful analogy for how engineers think about transfer functions. When someone speaks into a microphone at a radio station, their voice produces an audio signal — let’s call that the input, F(s). To broadcast it over long distances, this raw signal needs to be modulated onto a high-frequency carrier wave. That’s where frequency modulation (FM) comes in — a process we can represent abstractly as a system or “black box,” which we’ll call H(s). This system takes in the raw audio F(s) and transforms it into a modulated signal G(s) that can travel through the air to your radio. On the receiving end, your radio performs the inverse operation: it demodulates G(s) to recover the original voice signal — another system we might call I(s).[4]

Now, rather than getting bogged down in the complex math of modulation and demodulation, engineers often model each of these steps as a transfer function: a simplified abstraction of how inputs are converted to outputs.[5]
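To make the abstraction concrete, here is a minimal sketch in Python. It does not model FM modulation itself; as a stand-in, it assumes a much simpler black box, a first-order low-pass filter with a hypothetical cutoff of 1000 rad/s. The function names `lowpass` and `output` are illustrative only. The point is that, for a linear system, the output is just the input multiplied by the transfer function, G(s) = H(s)·F(s).

```python
import cmath
import math


def lowpass(s: complex, cutoff: float = 1000.0) -> complex:
    """Hypothetical black box H(s) = 1 / (1 + s / cutoff), a first-order low-pass filter."""
    return 1.0 / (1.0 + s / cutoff)


def output(transfer, f_of_s: complex, s: complex) -> complex:
    """G(s) = H(s) * F(s): the system's whole effect is one multiplication."""
    return transfer(s) * f_of_s


# Evaluate along the imaginary axis, s = j*omega, for a unit-amplitude input.
for omega in (10.0, 1000.0, 100000.0):
    s = complex(0.0, omega)
    g = output(lowpass, 1.0 + 0j, s)
    gain = abs(g)
    phase_deg = math.degrees(cmath.phase(g))
    print(f"omega={omega:.0f} rad/s  gain={gain:.3f}  phase={phase_deg:.1f} deg")
```

Low frequencies pass through almost untouched, while frequencies far above the cutoff are strongly attenuated. The caller never needs to know what is inside `lowpass`; it only needs to know what comes out for a given input.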

This is how we should start thinking about generative AI. It takes in inputs—questions, prompts, problems—and produces outputs—answers, designs, solutions. And while it’s tempting to focus on how the model arrives at those results, and to attribute some sort of “intelligence” to the mechanisms by which it does, what actually matters is whether the outputs are useful. Whether they help us solve the problem we started with.[6]

So, what does this mean for the future of jobs?

The Transfer Function Employment Worldview

Let’s imagine a world where the tasks for almost all digital “work”—writing, designing, coding, planning, etc.—can be handled by an AI agent that behaves like a transfer function. You give it a prompt. It gives you an answer. If the answer is good, you use it. If it’s not, you revise the input or try another function.
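The prompt, check, and retry loop described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a real AI API: `run_until_useful`, `shout`, `polite`, and `add_please` are all hypothetical names, and the "models" are plain functions that take a prompt string and return an answer string.

```python
from typing import Callable, Optional, Sequence

# Hypothetical stand-in for any AI system: prompt in, answer out.
Model = Callable[[str], str]


def run_until_useful(
    prompt: str,
    models: Sequence[Model],
    is_good: Callable[[str], bool],
    revise: Callable[[str, str], str],
    max_rounds: int = 3,
) -> Optional[str]:
    """Try each model on the prompt; if no answer passes, revise the prompt and retry."""
    if not models:
        raise ValueError("need at least one model")
    for _ in range(max_rounds):
        answer = ""
        for model in models:
            answer = model(prompt)          # the transfer function does the middle
            if is_good(answer):             # the human evaluates the output
                return answer
        prompt = revise(prompt, answer)     # the human redefines the input
    return None                             # nothing useful within the budget


# Toy stand-ins so the sketch runs without any external service.
def shout(prompt: str) -> str:
    return prompt.upper()


def polite(answer: str) -> bool:
    return "PLEASE" in answer


def add_please(prompt: str, last_answer: str) -> str:
    return prompt + " please"


print(run_until_useful("summarize", [shout], polite, add_please))
```

In this sketch the two human-supplied pieces, `is_good` and `revise`, are where all the judgment lives; the model itself is interchangeable.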

In this world, human effort revolves around three core activities:

Defining the input (the problem): What exactly do we want the AI to solve? This is where we figure out what matters, what needs to be built, what people want or need.

Evaluating the output (the solution): Did the AI produce something useful? Is it accurate, safe, valuable, and aligned with what we were hoping to achieve?

Acting on the solution in the physical world: Many jobs still require moving in the real world—fixing HVAC systems, delivering food, comforting a child, performing surgery.

These three activities lead to three categories of human employment:

Category 1 Jobs: LLM Direction. These are the people who define problems and evaluate outputs. They might work in product design, law, healthcare, marketing, education, or policy. Their job is to steward human needs and ensure that machines are solving the right problems in the right way.

Category 2 Jobs: Physical Implementation. These are the people who act on the outputs. They do things that AI cannot (yet) do effectively in the physical world: building physical goods, delivering in-person services, repairing machines, transporting goods, providing hands-on care, and so on.

Category 3 Jobs: Data Stewardship. I would also add a third type of job, which I call “data stewardship,” for people who are tasked with feeding the right data to the right AI systems. In a world where access to information is uneven, because companies, governments, and individuals all hold protected datasets, someone needs to manage, broker, and gatekeep data access. This role may shrink if data becomes open and universal, but in practice, it’s likely to be critical for a long time. These are the people who watch the black box itself and tweak it.

So how do all these categories work together in the transfer function analogy for generative AI? Consider the schematic diagram below. Category 1 jobs straddle the transfer function: they help define its input F(s) and assess its output G(s). Category 2 jobs take G(s) and convert it into practical things that people need in the world and that can’t be easily automated. And Category 3 jobs manage the transfer function itself: they help determine the data that go into creating H(s).

Examples

To illustrate how these jobs may work in action, let’s look at healthcare. Imagine a patient walks into a clinic with abdominal pain:

The doctor (a Category 1 role) listens to the patient, asks follow-up questions, and identifies the likely problem. That’s the first type of activity defined above: defining the input. Then the doctor might plug symptoms into a diagnostic tool powered by AI. The AI recommends a diagnosis and treatment. The doctor then reads the output and decides if it makes sense, possibly consulting a second AI or their own judgment. That’s the second type of activity: evaluating the output as good or bad.

If a blood test is required, then a phlebotomist is called in to draw blood (a Category 2 role). That’s the third type of activity—a physical action that the AI cannot do.

If the AI suggests surgery, and robots aren’t advanced enough, the surgeon performs the operation—again, the third type of activity.

Of course, someone might be needed to manage which personal health data the LLM uses in diagnosis, how these data are used, and whether that use is acceptable. That’s an example of a Category 3 role (data stewardship).

Another example focuses on Hollywood filmmaking:

In the future, AI might be able to write scripts, generate storyboards, create synthetic voices, edit footage, and even render entire scenes. But it still can’t decide why a story needs to be told a certain way. That’s the job of the director—a classic Category 1 role. They decide on the creative vision and then evaluate what the AI produces. Is the tone right? Is the pacing effective? Does it move the audience?

Meanwhile, the cinematographer might still physically handle real-world camera setups when shooting on location (if we haven’t fully digitized everything), and actors may still be needed for human connection and nuance that audiences value. These are Category 2 roles.

The data steward here might be the person curating massive datasets of film styles, audience preferences, or historical footage that the AI can pull from. They might also manage branding data and name-image-likeness (NIL) data, which require pre-authorization and contracting to use. That’s a Category 3 role.

So even in a highly digitized world, we still need three categories of humans: those who guide and evaluate AI, those who do the physical things AI can’t, and those who manage what data the AI can and cannot access.

What This Means for the Future of Jobs

This way of thinking helps us answer some tricky questions about the future of work. Rather than worrying about whether AI will “take our jobs,” the better question is: which category of job will each of us evolve into?

If you’re a writer, designer, or knowledge worker, your future might look less like “doing the work” and more like setting up the problem and checking the solution. You become a kind of creative director—not the artist, but the person guiding the art. Not the coder, but the one asking the system to code something, checking it, refining the brief.

If you’re a hands-on worker—plumber, nurse, electrician, chef—your work may remain remarkably unchanged for some time. The AI can suggest what to do, but it still can’t crawl under a sink, hold a hand, or cook an omelet (yet). That’s good news for Category 2.

And if you work in data infrastructure, legal compliance, or security, you may find yourself in a growing Category 3 role: the invisible hand that determines what the transfer function can see.[7]

Final Thought

In a world of transfer functions, the key skill is not learning how to out-compete the AI. It’s learning how to collaborate with it: to become the person who defines the right problems, evaluates the right solutions, and ensures the work leads to real-world impact. We’ll still need judgment, ethics, empathy, and taste. The difference is that we’ll use those qualities less for doing the task and more for steering the system. So, ask yourself: when the transfer function does the middle, what part do you want to play: upstream, downstream, or behind the scenes? [8]

[1] Goldman Sachs Global Investment Research: Generative AI could raise global GDP by 7% (2023)

[2] World Economic Forum: The Future of Jobs Report 2023

[3] Note that transfer functions can be deterministic, where the same input always produces the same output across multiple trials, or non-deterministic (probabilistic), where the output is influenced by an element of randomness. LLMs used in Generative AI are best described as probabilistic transfer functions because the same prompt can generate different responses. In LLMs, the randomness or creativity of the output is modulated by setting a parameter called "temperature".

[4] For the geeks out there, the “s” in the functions above is a complex variable, s = σ + jω, where ω is the angular frequency of the signal (in radians per second), j is the square root of -1, and σ is the real part of the variable. In most practical frequency-response analysis, σ = 0, so s = jω.

[5] This is a gross simplification of transfer functions and their incredible value to engineers (which involves, among other things, simplifying the math used for time-based systems). But the point of this article isn’t to dive into electrical engineering control theory; it’s to use the transfer function analogy to help explain how to think about employment in the age of Generative AI.

[6] Of course, engineers will rightly note that this analogy has its limits. Transfer functions in control theory typically describe linear, time-invariant, deterministic systems, and they are written in the frequency (Laplace) domain rather than the time domain. Generative AI, by contrast, is nonlinear and probabilistic, and it doesn’t involve that kind of frequency-domain analysis. But the metaphor still holds at a conceptual level: in both cases, a system takes an input, performs a complex and mostly opaque transformation, and produces a usable output.

[7] Robert Capps of The New York Times Magazine recently published “22 New Jobs A.I. Could Give You”, where he shares his perspective on how human jobs may evolve in a Generative AI world.

[8] Thanks to Cansa Fis, Lily Lu, and Cam Hauser for providing valuable feedback on this essay!

…i think this is really sharp observation Adam and a good way to lessen some of the doomerism around the future of work…

I’ve been thinking about each of these roles myself, nicely put.

The diagnostic is so important, in my world (marketing), everyone is scrambling to use AI regardless of its compatibility. Last week, I had a conversation about automating a process with AI that didn’t need to exist. So many examples of this happening and it’s only going to get worse.

The people saying AI is ‘taking jobs,’ in a fear spiral also seem to miss the point that it’s dissolving responsibility from the humans making those decisions. Of course, some industries and use cases are going to create a competitive need to outsource to AI, but there are other examples I’m seeing first hand where people are outsourcing to AI based on incremental profit, removing creative skill for a weaker result. Is that AI taking jobs or people choosing to place AI in the job to save a few dollars and is/where is the line on management responsibility?

Something I’ve been pondering on.

Anyway, great essay